Decompression Tables

In diving, decompression is the reduction in ambient pressure that a diver experiences during the ascent at the end of a dive or other hyperbaric exposure. The term refers both to this reduction in pressure and to the process of allowing dissolved inert gases to be eliminated from the tissues while it takes place.

When a diver descends in the water column, the ambient pressure rises. Breathing gas is supplied at the same pressure as the surrounding water, and some of this gas dissolves into the diver’s blood and other tissues. Inert gas continues to be taken up until the gas dissolved in the diver is in equilibrium with the breathing gas in the diver’s lungs (see “Saturation diving”), or until the diver moves up in the water column and reduces the ambient pressure of the breathing gas to the point where the inert gases dissolved in the tissues are at a higher concentration than the equilibrium state and start diffusing out again. Dissolved inert gases such as nitrogen or helium can form bubbles in the blood and tissues of the diver if the partial pressures of the dissolved gases get too high compared to the ambient pressure. These bubbles, and the products of injury caused by them, can damage tissues, a condition known as decompression sickness or the bends. The immediate goal of controlled decompression is to avoid the development of symptoms of bubble formation in the tissues of the diver; the long-term goal is also to avoid complications due to sub-clinical decompression injury.
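
The pressure relationships described above can be sketched in a few lines. This is a minimal illustration, not a decompression tool: it assumes that every 10 m of seawater adds about 1 bar, that surface pressure is 1 bar, and that the breathing gas is air at 79% nitrogen. None of these constants come from the text, and all function names are hypothetical.

```python
# Illustrative constants (assumptions, not taken from the article):
SURFACE_PRESSURE_BAR = 1.0  # sea-level atmospheric pressure, rounded
METRES_PER_BAR = 10.0       # each ~10 m of seawater adds ~1 bar
N2_FRACTION_AIR = 0.79      # nitrogen fraction of air, ignoring trace gases


def ambient_pressure(depth_m: float) -> float:
    """Absolute ambient pressure (bar) at a given seawater depth."""
    return SURFACE_PRESSURE_BAR + depth_m / METRES_PER_BAR


def inspired_n2(depth_m: float, fn2: float = N2_FRACTION_AIR) -> float:
    """N2 partial pressure (bar) of breathing gas supplied at ambient pressure.

    A tissue held at this depth long enough reaches this dissolved N2 pressure
    (equilibrium, i.e. saturation).
    """
    return fn2 * ambient_pressure(depth_m)


def is_off_gassing(tissue_n2_bar: float, depth_m: float) -> bool:
    """True when dissolved N2 exceeds the N2 pressure in the lungs, so gas diffuses out."""
    return tissue_n2_bar > inspired_n2(depth_m)


def supersaturation_ratio(tissue_n2_bar: float, depth_m: float) -> float:
    """Dissolved N2 pressure divided by ambient pressure.

    Values above 1.0 mean the dissolved gas pressure exceeds the ambient
    pressure (the tissue is supersaturated), the condition in which bubbles
    can form if the excess becomes too large.
    """
    return tissue_n2_bar / ambient_pressure(depth_m)


if __name__ == "__main__":
    # A tissue saturated on air at 30 m, then brought straight to the surface.
    loaded = inspired_n2(30.0)
    print(f"dissolved N2: {loaded:.2f} bar")
    print(f"off-gassing at 10 m: {is_off_gassing(loaded, 10.0)}")
    print(f"ratio at surface: {supersaturation_ratio(loaded, 0.0):.2f}")
```

The example separates the two ideas in the paragraph: gas leaves the tissue whenever its dissolved pressure exceeds the inspired partial pressure, while bubble risk depends on how far the dissolved pressure exceeds the ambient pressure.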

The symptoms of decompression sickness are known to be caused by damage resulting from the formation and growth of bubbles of inert gas within the tissues, and by blockage of the arterial blood supply to tissues by gas bubbles and other emboli arising from bubble formation and tissue damage. The precise mechanisms of bubble formation and the damage the bubbles cause have been the subject of medical research for a considerable time, and several hypotheses have been advanced and tested. Tables and algorithms for predicting the outcome of decompression schedules for specified hyperbaric exposures have been proposed, tested, and used, and have usually been found useful but not entirely reliable. Decompression remains a procedure with some risk, but this risk has been reduced and is generally considered acceptable for dives within the well-tested range of commercial, military and recreational diving.

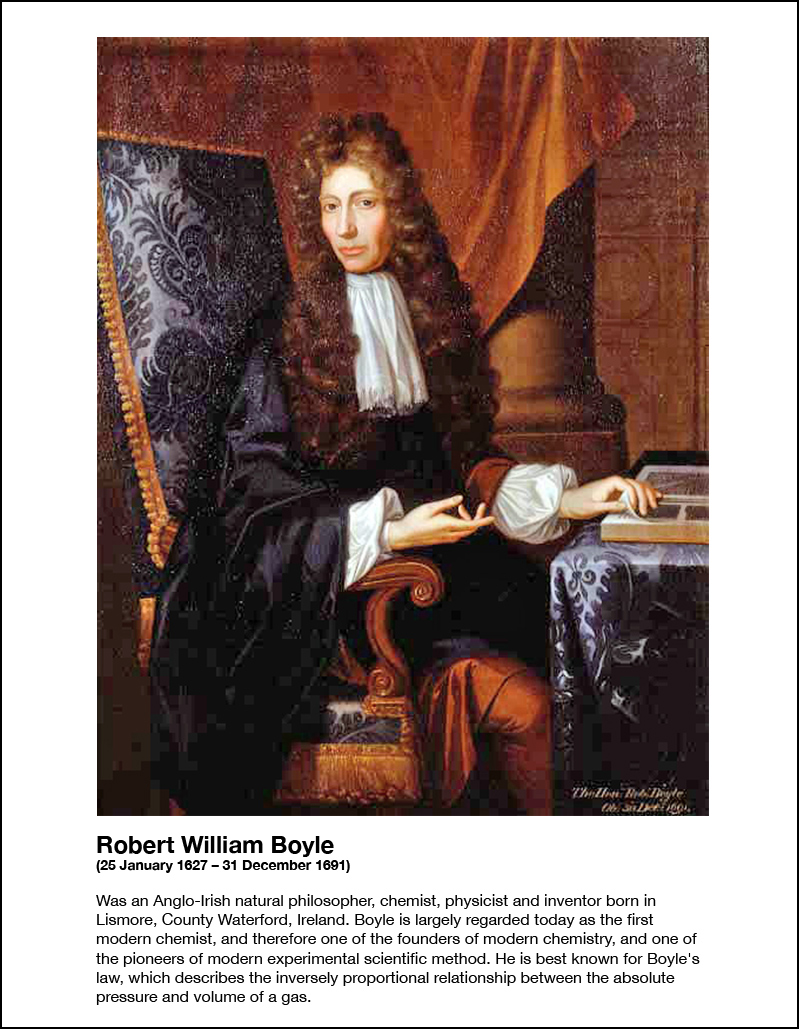

The first recorded experimental work related to decompression was conducted by Robert Boyle, who subjected experimental animals to reduced ambient pressure by use of a primitive vacuum pump. In the earliest experiments the subjects died from asphyxiation, but in later experiments, signs of what was later to become known as decompression sickness were observed. Later, when technological advances allowed the use of pressurisation of mines and caissons to exclude water ingress, miners were observed to present symptoms of what would become known as caisson disease, the bends, and decompression sickness. Once it was recognized that the symptoms were caused by gas bubbles, and that recompression could relieve the symptoms, further work showed that it was possible to avoid symptoms by slow decompression, and subsequently various theoretical models have been derived to predict low-risk decompression profiles and treatment of decompression sickness.

Haldane’s theory and tables

John Scott Haldane was commissioned by the Royal Navy to develop a safe decompression procedure. The method in use at the time was a slow linear decompression, and Haldane was concerned that it was ineffective because of additional nitrogen buildup during the slow early stages of the ascent.[44]

Haldane’s hypothesis was that a diver could ascend immediately to a depth where the supersaturation reaches but does not exceed the critical supersaturation level, at which depth the pressure gradient for off-gassing is maximized and the decompression is most efficient. The diver would remain at this depth until saturation had reduced sufficiently for him to ascend another 10 feet, to the new depth of critical supersaturation, where the process would be repeated until it was safe for the diver to reach the surface. Haldane assumed a constant critical ratio of dissolved nitrogen pressure to ambient pressure which was invariant with depth.[44]

A large number of decompression experiments were done using goats, which were compressed for three hours to assumed saturation, rapidly decompressed to surface pressure, and examined for symptoms of decompression sickness. Goats which had been compressed to 2.25 bar absolute or less showed no signs of DCS after rapid decompression to the surface. Goats compressed to 6 bar and rapidly decompressed to 2.6 bar (pressure ratio 2.3 to 1) also showed no signs of DCS. Haldane and his co-workers concluded that a decompression from saturation with a pressure ratio of 2 to 1 was unlikely to produce symptoms.[45]
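
The pressure ratios in these experiments are simple quotients of absolute pressures. The sketch below reproduces them, assuming surface pressure is 1.0 bar (a rounding assumption); the names are illustrative only.

```python
HALDANE_LIMIT = 2.0  # the 2:1 ratio judged unlikely to produce symptoms


def pressure_ratio(saturation_bar: float, final_bar: float) -> float:
    """Saturation pressure divided by the pressure reached after rapid decompression."""
    return saturation_bar / final_bar


# Symptom-free goat exposures described above, as (saturation, final)
# pressure in bar absolute. The surface is taken as 1.0 bar (assumption).
SYMPTOM_FREE = {
    "2.25 bar to surface": (2.25, 1.0),
    "6 bar to 2.6 bar": (6.0, 2.6),
}

if __name__ == "__main__":
    for label, (p_sat, p_final) in SYMPTOM_FREE.items():
        ratio = pressure_ratio(p_sat, p_final)
        print(f"{label}: ratio {ratio:.2f} ({ratio - HALDANE_LIMIT:+.2f} vs 2:1)")
```

Both symptom-free exposures had ratios slightly above 2:1 (2.25 and about 2.31), so a 2:1 limit lies just below the ratios that were observed to be tolerated.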

Haldane’s model

The decompression model formulated from these findings made the following assumptions.[8]

- Living tissues become saturated at different rates in different parts of the body. Saturation time varies from a few minutes to several hours.

- The rate of saturation follows an exponential curve and is approximately complete in 3 hours in goats and 5 hours in humans.

- The desaturation process follows the same pressure/time function as saturation (it is symmetrical), provided no bubbles have formed.

- The slow tissues are most important in avoiding bubble formation.

- A pressure ratio of 2 to 1 during decompression will not produce decompression symptoms.

- A supersaturation of dissolved nitrogen that exceeds twice the ambient pressure is unsafe.

- Efficient decompression from high pressures should start by rapidly halving the absolute pressure, followed by a slower ascent to ensure that the partial pressure in the tissues does not at any stage exceed about twice the ambient pressure.

- The different tissues were designated as tissue groups with different half-times, and saturation was assumed after four half-times (93.75%).

- Five tissue compartments were chosen, with half-times of 5, 10, 20, 40 and 75 minutes.[46]

- Depth intervals of 10 ft were chosen for decompression stops.[8]
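
A minimal sketch of the compartment assumptions above: in each compartment the difference between tissue pressure and ambient pressure halves every half-time, and the same formula is used for uptake and elimination. Pressures here are generic absolute pressures (for example in ata). To track nitrogen specifically, the inspired nitrogen partial pressure would be passed as `p_ambient`. The 30-minute, 4 ata exposure is a hypothetical example, and the function names are illustrative.

```python
HALF_TIMES_MIN = (5, 10, 20, 40, 75)  # Haldane's five compartments


def compartment_pressure(p_start: float, p_ambient: float,
                         minutes: float, half_time: float) -> float:
    """Gas pressure in one compartment after `minutes` at constant ambient pressure.

    The gap to ambient halves every half-time. The same formula serves for
    uptake and elimination, matching the symmetry assumption (no bubbles).
    """
    return p_ambient + (p_start - p_ambient) * 2.0 ** (-minutes / half_time)


def saturation_fraction(minutes: float, half_time: float) -> float:
    """Fraction of the way to full saturation reached after `minutes`."""
    return 1.0 - 2.0 ** (-minutes / half_time)


if __name__ == "__main__":
    # Four half-times of the 75-minute compartment: 1 - 1/16.
    print(saturation_fraction(4 * 75, 75))  # 0.9375
    # Hypothetical exposure: 30 minutes at 4 ata, starting from 1 ata.
    for t in HALF_TIMES_MIN:
        print(t, round(compartment_pressure(1.0, 4.0, 30, t), 2))
```

After four half-times any compartment has covered 1 - 1/16 = 93.75% of the gap, which is the saturation criterion in the list. For a given exposure, the faster compartments end up closer to ambient pressure than the slower ones.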

Decompression tables

This model was used to compute a set of tables. The method consists of choosing a depth and exposure time, and calculating the nitrogen partial pressure in each of the tissue compartments at the end of that exposure.[8]

- The depth of the first stop is found from the tissue compartment with the highest partial pressure. It is the standard stop depth at which the ratio of that compartment's partial pressure to the ambient pressure comes closest to the critical pressure ratio without exceeding it.[8]

- The time at each stop is the time required to reduce the partial pressure in all compartments to a level that is safe for the next stop, 10 ft shallower.[8]

- The controlling compartment for the first stop is usually the fastest tissue, but the controlling compartment generally changes during the ascent, and slower tissues usually control the shallower stop times. The longer the bottom time, and the closer the slower tissues are to saturation, the slower the tissue that controls the final stops.
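
The table procedure above can be sketched as a short loop. This is an illustrative reconstruction under stated assumptions, not Haldane's actual computation. Tissue tensions are tracked as total absolute pressure in ata (a simplification, since the text frames the ratio in terms of dissolved nitrogen). Descent and ascents between stops are treated as instantaneous, 33 ft of seawater is taken as 1 atm, and stop times are rounded up to whole minutes. All names are hypothetical.

```python
FSW_PER_ATM = 33.0                    # feet of seawater per atmosphere (approx.)
HALF_TIMES_MIN = (5, 10, 20, 40, 75)  # Haldane's compartments
CRITICAL_RATIO = 2.0                  # tension may not exceed 2x ambient
STOP_INTERVAL_FT = 10                 # standard stop spacing


def ambient_ata(depth_ft: float) -> float:
    return 1.0 + depth_ft / FSW_PER_ATM


def expose(tensions, depth_ft, minutes):
    """Advance every compartment by `minutes` at a constant depth."""
    p_amb = ambient_ata(depth_ft)
    return [p_amb + (p - p_amb) * 2.0 ** (-minutes / t)
            for p, t in zip(tensions, HALF_TIMES_MIN)]


def safe_at(tensions, depth_ft):
    # With one ratio for every compartment, the most loaded one controls.
    return max(tensions) <= CRITICAL_RATIO * ambient_ata(depth_ft)


def first_stop(tensions):
    """Shallowest standard stop depth at which no compartment exceeds the ratio."""
    depth = 0
    while not safe_at(tensions, depth):
        depth += STOP_INTERVAL_FT
    return depth


def schedule(depth_ft, bottom_min):
    """Return [(stop_depth_ft, minutes), ...] for a square air dive."""
    tensions = expose([1.0] * len(HALF_TIMES_MIN), depth_ft, bottom_min)
    stops = []
    stop = first_stop(tensions)
    while stop > 0:
        next_stop = stop - STOP_INTERVAL_FT
        minutes = 0
        # Wait in whole minutes until every compartment is safe 10 ft shallower.
        while not safe_at(tensions, next_stop):
            tensions = expose(tensions, stop, 1)
            minutes += 1
        stops.append((stop, minutes))
        stop = next_stop
    return stops


if __name__ == "__main__":
    for depth, minutes in schedule(100, 60):
        print(f"stop at {depth} ft for {minutes} min")
```

Under these assumptions a 100 ft, 60-minute dive gets its first stop at 40 ft, set by the nearly saturated 5-minute compartment. The stop times it prints are illustrative only and do not reproduce any published table.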

Chamber tests and open-water dives with two divers were carried out in 1906, and the divers were successfully decompressed from each exposure. The tables were adopted by the Royal Navy in 1908. The Haldane tables of 1906 are considered to be the first true set of decompression tables, and the basic concept of parallel tissue compartments with half-times and critical supersaturation limits is still in use in several later decompression models, algorithms, tables and decompression computers.

U.S. Navy decompression tables

US Navy decompression tables have been revised many times over the years. They have mostly been based on parallel multi-compartment exponential models. The number of compartments has varied, and the allowable supersaturation in the various compartments during ascent has been substantially revised on the basis of experimental work and records of decompression sickness incidents.